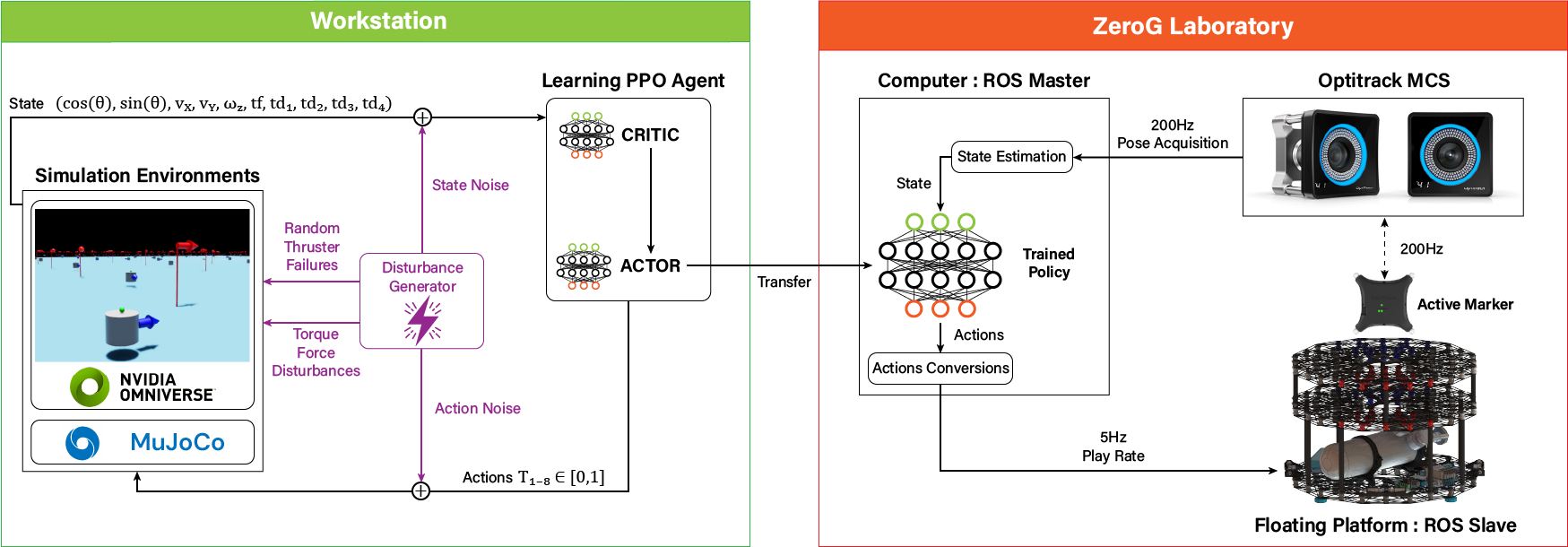

Framework Overview

Framework employed for training and evaluation. Left: the agent interacts with the simulation environments during both the training and evaluation phases, with disturbances injected into the control loop. Right: the trained policy is deployed on the real FP system, where it performs open-loop control.
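To make the "disturbances in the loop" idea on the left side concrete, here is a minimal sketch under loudly stated assumptions. It is not the framework's actual implementation. The 1-D point mass (`PointMassEnv`), the uniform random force disturbance (`DisturbanceWrapper`), and the PD controller standing in for the trained agent (`pd_policy`) are all hypothetical placeholders. The sketch only shows the structure: the disturbance is added between the agent's commanded action and the simulated plant, so the policy never observes it directly.

```python
import random
from dataclasses import dataclass


@dataclass
class PointMassEnv:
    """Hypothetical 1-D unit point mass standing in for a simulation environment."""
    dt: float = 0.1
    pos: float = 1.0
    vel: float = 0.0

    def reset(self, pos: float = 1.0) -> list[float]:
        self.pos, self.vel = pos, 0.0
        return [self.pos, self.vel]

    def step(self, force: float) -> tuple[list[float], float]:
        # Semi-implicit Euler integration: update velocity first, then position.
        self.vel += force * self.dt
        self.pos += self.vel * self.dt
        reward = -(self.pos ** 2)  # assumed reward: penalize distance from the origin
        return [self.pos, self.vel], reward


class DisturbanceWrapper:
    """Injects a bounded random force into every commanded action (assumed disturbance model)."""

    def __init__(self, env: PointMassEnv, max_force: float, seed: int = 0):
        self.env = env
        self.max_force = max_force
        self.rng = random.Random(seed)  # seeded so training/evaluation runs are reproducible

    def reset(self, pos: float = 1.0) -> list[float]:
        return self.env.reset(pos)

    def step(self, force: float) -> tuple[list[float], float]:
        disturbance = self.rng.uniform(-self.max_force, self.max_force)
        return self.env.step(force + disturbance)


def pd_policy(obs: list[float], kp: float = 2.0, kd: float = 1.5) -> float:
    """Placeholder controller standing in for the trained policy."""
    pos, vel = obs
    return -kp * pos - kd * vel


def rollout(env, policy, steps: int = 100) -> tuple[list[float], float]:
    """Run one closed-loop episode in simulation; return final observation and total reward."""
    obs = env.reset()
    total = 0.0
    for _ in range(steps):
        obs, reward = env.step(policy(obs))
        total += reward
    return obs, total


if __name__ == "__main__":
    for max_force in (0.0, 0.5):
        final_obs, ret = rollout(DisturbanceWrapper(PointMassEnv(), max_force), pd_policy)
        print(f"max_force={max_force}: final pos={final_obs[0]:.4f}, return={ret:.3f}")
```

Keeping the disturbance in a wrapper rather than inside the environment lets the same simulator be run with or without perturbations, which mirrors the figure's use of one simulation setup for both training and evaluation.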